Thanks for your reply.

Just to make sure I understand you correctly.

For the byte-range request, I think you mean « fetch a byte-range from an object »:

> Using the Range HTTP header in a GET Object request, you can fetch a byte-range from an object, transferring only the specified portion. …
>
> Typical sizes for byte-range requests are 8 MB or 16 MB. If objects are PUT using a multipart upload, it’s a good practice to GET them in the same part sizes (or at least aligned to part boundaries) for best performance. GET requests can directly address individual parts; for example, `GET ?partNumber=N`.

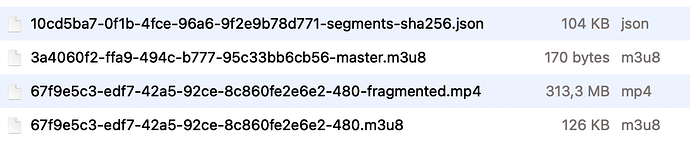
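To make the 8 MB / 16 MB guidance concrete, here is a minimal Python sketch that splits an object of known size into consecutive `Range` header values. The function name `byte_ranges` is hypothetical, and the part size is simply the 8 MB figure quoted above, taken here as 8 MiB. Note that the end offset in a `Range` header is inclusive.

```python
from __future__ import annotations

# 8 MiB: an assumption based on the "8 MB or 16 MB" guidance; 16 MiB works the same way.
PART_SIZE = 8 * 1024 * 1024


def byte_ranges(object_size: int, part_size: int = PART_SIZE) -> list[str]:
    """Split object_size bytes into consecutive Range header values of at most part_size bytes."""
    ranges = []
    for start in range(0, object_size, part_size):
        # The end offset in a Range header is inclusive, hence the "- 1".
        end = min(start + part_size, object_size) - 1
        ranges.append(f"bytes={start}-{end}")
    return ranges


if __name__ == "__main__":
    # A 20 MiB object needs three requests with 8 MiB parts.
    for value in byte_ranges(20 * 1024 * 1024):
        print("Range:", value)
```

For a 20 MiB object this prints three `Range:` lines, the last one being `bytes=16777216-20971519`.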

However, I checked, and my object storage provider does not use multipart upload. It uploads each whole file as ONE single object.

Or, regarding the S3 proxy, do you mean my Cloudflare CDN needs some configuration?

Or do I need to set up something like this post on my server? I saw you also mentioned: "It seems the Range header is not sent to object storage because it returns the entire file every time."
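One way to check the symptom quoted above (the entire file coming back every time) is to request a small range and look at the status code: a server that applied the `Range` header answers `206 Partial Content` with a `Content-Range` header, while one that ignored it answers `200` with the full body. This is a minimal sketch using only the Python standard library; the helper names are hypothetical and the URL you probe is up to you.

```python
from __future__ import annotations

import re
import urllib.request

# Matches e.g. "bytes 0-1023/52428800"; the total may be "*" when unknown.
_CONTENT_RANGE = re.compile(r"^bytes (\d+)-(\d+)/(\d+|\*)$")


def parse_content_range(value: str | None) -> tuple[int, int, int | None] | None:
    """Parse a Content-Range header into (start, end, total); None if absent or malformed."""
    if not value:
        return None
    match = _CONTENT_RANGE.match(value.strip())
    if not match:
        return None
    total = None if match.group(3) == "*" else int(match.group(3))
    return int(match.group(1)), int(match.group(2)), total


def range_was_honored(status: int, content_range: str | None) -> bool:
    """A server that applied the Range header answers 206 together with a Content-Range header."""
    return status == 206 and parse_content_range(content_range) is not None


def probe(url: str, first: int = 0, last: int = 1023) -> bool:
    """Request bytes first..last of url and report whether the server honored the Range header."""
    request = urllib.request.Request(url, headers={"Range": f"bytes={first}-{last}"})
    with urllib.request.urlopen(request) as response:
        # The body is never read here; the response is simply closed.
        return range_was_honored(response.status, response.headers.get("Content-Range"))
```

Calling `probe()` once on a video URL served through Cloudflare and once on the same object fetched directly from Wasabi would show which hop drops the header, assuming both URLs are publicly reachable.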

I followed what was mentioned in that thread and ran this:

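```sh
# -i prints the response headers, where the CORS answer appears.
# The closing quote after the Origin URL must be a straight ' (a curly ’ leaves the quote open).
curl -i 'https://qien.tv' -X OPTIONS \
  -H 'Access-Control-Request-Method: GET' \
  -H 'Access-Control-Request-Headers: range,user-agent' \
  -H 'Origin: https://qien.tv'
```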

It returns only this:

```
quote>
```
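The same preflight can also be sent from Python, where no shell quoting is involved. This sketch (hypothetical helper names) sends the headers from the curl command and checks whether the `Access-Control-Allow-Headers` value in the response permits `range`.

```python
from __future__ import annotations

import urllib.request


def header_allowed(allow_headers: str | None, name: str) -> bool:
    """True if an Access-Control-Allow-Headers value permits the request header `name`."""
    if not allow_headers:
        return False
    tokens = {token.strip().lower() for token in allow_headers.split(",")}
    # "*" acts as a wildcard, but browsers honor it only for requests without credentials.
    return "*" in tokens or name.lower() in tokens


def preflight_allow_headers(url: str, origin: str) -> str | None:
    """Send the same OPTIONS preflight as the curl command; return Access-Control-Allow-Headers.

    urllib raises urllib.error.HTTPError if the server answers with a non-2xx status.
    """
    request = urllib.request.Request(
        url,
        method="OPTIONS",
        headers={
            "Origin": origin,
            "Access-Control-Request-Method": "GET",
            "Access-Control-Request-Headers": "range,user-agent",
        },
    )
    with urllib.request.urlopen(request) as response:
        return response.headers.get("Access-Control-Allow-Headers")
```

Usage: `header_allowed(preflight_allow_headers("https://qien.tv", "https://qien.tv"), "range")` returns `True` only if the server explicitly allows the header.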

I don’t know what I should do next.

I also found this Wasabi article, « How to restrict object access based on HTTP referer header? », which says:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowRequestHTTPHeader",
      "Effect": "Allow",
      "Principal": "*",
      "Action": ["s3:GetObject", "s3:GetObjectVersion"],
      "Resource": "arn:aws:s3:::wasabibucket1/*",
      "Condition": {
        "StringLike": {"aws:Referer": ["http://www.example.com/*", "http://example.com/*"]}
      }
    }
  ]
}
```

Please note:

- The web browser must pass along the HTTP `Referer` request header for this policy to function properly.
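To see what "the browser must pass along the header" means in practice, this sketch imitates the `StringLike` condition from the policy locally. It is a rough approximation written for illustration (`*` matches any run of characters, `?` exactly one, case-sensitive), not Wasabi's actual policy evaluator, and the function names are hypothetical.

```python
from __future__ import annotations

import re

# Patterns copied from the aws:Referer condition in the example policy above.
REFERER_PATTERNS = ("http://www.example.com/*", "http://example.com/*")


def string_like(pattern: str, value: str) -> bool:
    """Rough StringLike imitation: '*' matches any run of characters, '?' exactly one."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, value, flags=re.DOTALL) is not None


def referer_allowed(referer: str | None, patterns: tuple[str, ...] = REFERER_PATTERNS) -> bool:
    """With no Referer header the condition cannot match, so this Allow statement grants nothing."""
    if referer is None:
        return False
    return any(string_like(pattern, referer) for pattern in patterns)
```

For example, `referer_allowed("http://www.example.com/watch/1")` is true, while `referer_allowed(None)`, the case of a browser or privacy setting that strips the header, is false.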

Do you mean that I should configure Cloudflare CDN (my S3 proxy) to correctly forward the Range HTTP header to Wasabi?

So should I use something like "Cloudflare worker to fetch byte request range on initial request"?
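Cloudflare Workers are written in JavaScript or TypeScript, so the following is not a Worker. It is a minimal Python sketch of the same idea, under the assumption that whatever sits between the browser and Wasabi has to copy the `Range` header onto the upstream request and copy `206`, `Content-Range` and `Accept-Ranges` back to the browser. `UPSTREAM` is a placeholder, and `RangeProxy` and `pick_headers` are hypothetical names.

```python
from __future__ import annotations

import shutil
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler

# Placeholder origin (hypothetical): replace with the real bucket endpoint.
UPSTREAM = "https://s3.wasabisys.com/your-bucket"

# Request headers that must reach the origin for partial content to work.
FORWARD_REQUEST = ("Range", "If-Range")
# Response headers a video player relies on for seeking.
FORWARD_RESPONSE = (
    "Content-Type",
    "Content-Length",
    "Content-Range",
    "Accept-Ranges",
    "ETag",
    "Last-Modified",
)


def pick_headers(source, names) -> dict[str, str]:
    """Copy the named headers present in `source` (HTTPMessage lookups are case-insensitive)."""
    picked = {}
    for name in names:
        value = source.get(name)
        if value is not None:
            picked[name] = value
    return picked


class RangeProxy(BaseHTTPRequestHandler):
    """Forwards GET requests to UPSTREAM, passing Range through in both directions."""

    def do_GET(self) -> None:
        upstream_request = urllib.request.Request(
            UPSTREAM + self.path, headers=pick_headers(self.headers, FORWARD_REQUEST)
        )
        try:
            with urllib.request.urlopen(upstream_request) as response:
                self._relay(response.status, response.headers, response)
        except urllib.error.HTTPError as err:
            # 304, 404, 416, ... still come with a status code and headers.
            self._relay(err.code, err.headers, err)

    def _relay(self, status, headers, body) -> None:
        self.send_response(status)
        for name, value in pick_headers(headers, FORWARD_RESPONSE).items():
            self.send_header(name, value)
        self.end_headers()
        # A 206 body is only the requested slice; a 200 body is the whole file.
        shutil.copyfileobj(body, self.wfile)
```

To try it locally (Python 3.7+): `http.server.ThreadingHTTPServer(("127.0.0.1", 8080), RangeProxy).serve_forever()`, then request `http://127.0.0.1:8080/<object-key>` with a `Range` header. Whatever proxy is actually used, the same two rules apply: forward `Range` upstream, and pass the `206` response and its headers back unchanged.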

I don’t know what I should do next.


Sorry, I can read every word, but I really don’t know what to do. Could you please explain in more detail what I should do? Thanks.