Hello,

On the one hand, I have a freshly installed PeerTube instance; on the other hand, a Synology NAS. I want to use the latter to back up the former (past experience has shown me that not having a backup is a bad idea).

So far, my plan, based on this page of the PeerTube documentation, is the following:

- Create a script that executes the following operations

- use pg_dump to generate a dump of the database

- use rsync on the server to copy to the NAS:

- the database dump just created

- the ‹ /var/www/peertube/storage › directory

- the ‹ /var/www/peertube/config › file

- the ‹ /etc/letsencrypt/live/<my_peertube_url>/ › directory

- the ‹ /etc/nginx/sites-available/peertube › directory

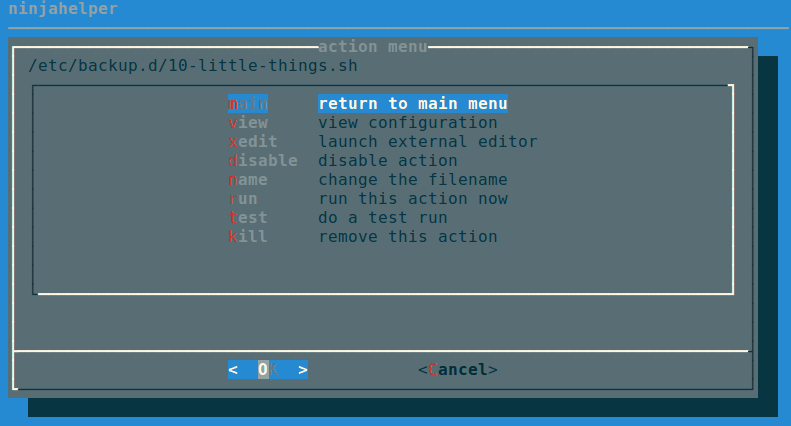

- the few scripts (cron) I have

- Create a cron job that runs this script monthly.
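The plan above could be sketched roughly as the following shell script. It is only a sketch under assumptions: the database name `peertube_prod`, the dump location, the NAS target and the domain are all placeholders that are not given in this post, and the script is assumed to run as a user who can both read these paths and connect to the database. By default it only prints the commands (`DRY_RUN=1`), so it can be checked before anything is copied.

```shell
#!/bin/sh
# Sketch of the backup plan: dump the database, then rsync everything to the NAS.
set -eu

# Placeholder values (assumptions, not taken from the post); override via the environment.
DB_NAME="${DB_NAME:-peertube_prod}"
DUMP_FILE="${DUMP_FILE:-/var/backups/peertube/peertube.dump}"
NAS_TARGET="${NAS_TARGET:-backup@nas.example:/volume1/backups/peertube/}"
DOMAIN="${DOMAIN:-peertube.example}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = really run them

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

backup() {
    # 1. Dump the database first, in pg_dump's custom archive format (-F c).
    run pg_dump -F c -f "$DUMP_FILE" "$DB_NAME"

    # 2. Copy the dump and the PeerTube/nginx files.
    #    -a preserves permissions and timestamps, -R recreates the full source path.
    #    No --delete: files removed on the server after the dump stay on the NAS.
    #    Paths of personal cron scripts would be added to this list.
    run rsync -aR \
        "$DUMP_FILE" \
        /var/www/peertube/storage \
        /var/www/peertube/config \
        /etc/nginx/sites-available/peertube \
        "$NAS_TARGET"

    # 3. The letsencrypt "live" directory contains symlinks into ../../archive,
    #    so -L is added to copy the files the links point to.
    run rsync -aRL "/etc/letsencrypt/live/$DOMAIN" "$NAS_TARGET"
}

backup
```

If the script were saved as, say, `/usr/local/bin/peertube-backup.sh` (a hypothetical path), a monthly crontab entry could look like `0 4 1 * * DRY_RUN=0 /usr/local/bin/peertube-backup.sh`, which runs it at 04:00 on the first day of each month.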

In the PeerTube documentation, pg_dump is called after PeerTube is stopped, but from here I understand that I can also run it while the instance is running (I don’t want to shut down the instance for each backup).
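As far as I understand, pg_dump reads from a single consistent snapshot and does not block normal reads and writes, which is why running it on a live database should be fine. A minimal sketch of a slightly more careful dump step is below. The function name `dump_database` and the `.tmp` suffix are made up for illustration; the idea is to write to a temporary file, check that the archive is readable, and only then replace the previous dump.

```shell
#!/bin/sh
# dump_database DB_NAME OUT_FILE  (hypothetical helper, for illustration only)
# Dumps to a temporary file, checks that the archive can be read, and only then
# replaces the previous dump, so a failed run never overwrites a good one.
dump_database() {
    db_name="$1"
    out_file="$2"
    tmp_file="$out_file.tmp"

    # Custom-format dump; this can run while the database is in use.
    if ! pg_dump -F c -f "$tmp_file" "$db_name"; then
        rm -f "$tmp_file"
        return 1
    fi

    # pg_restore --list only reads the archive's table of contents; it restores nothing.
    if ! pg_restore --list "$tmp_file" >/dev/null; then
        rm -f "$tmp_file"
        return 1
    fi

    mv "$tmp_file" "$out_file"
}
```

It could be called as `dump_database peertube_prod /var/backups/peertube/peertube.dump`, where both the database name and the path are assumptions.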

My questions are:

- Is my plan sensible, or is there an obviously better alternative? (For example, I considered setting up a database replica on the NAS, but since I only want a backup, and since I am in no hurry to fix the server if it crashes, this seems like overkill compared to pg_dump.)

- If something changes in the database between the dump and the copy of the storage, I imagine this could leave the backed-up database inconsistent with the storage, in particular if a video or a comment is removed between the two. How resilient is the database to this kind of thing? Is there a simple way to limit the damage? [Note that I don’t expect a lot of activity on the server, so if I avoid the peak viewing times (which I assume to be evenings and weekends in my timezone), this is unlikely to happen at all.]

- Some files are unlikely to change much, like the nginx setup. Would it make more sense to back these up only once? If yes, are there other such files?

- This solution doesn’t use anything specific to the Synology NAS. Do you know of something specific to it that would be better suited?

- Have I forgotten anything? (A priori, I don’t plan to back up the systemd config files and startup scripts, since I just followed the PeerTube documentation.)

Thanks in advance for your advice.

micropatates