Hello. As far as I can see, the Nginx config file shipped for the Docker setup uses the deprecated "listen ... http2" syntax. This may be worth fixing, since nginx 1.27 is what gets installed.

postfix-1 | No DKIM private key found for selector 'mail' in domain '<MY'. Generating one now...

webserver-1 | 2025/04/05 21:28:33 [warn] 9#9: the "listen ... http2" directive is deprecated, use the "http2" directive instead in /etc/nginx/conf.d/default.conf:23

webserver-1 | nginx: [warn] the "listen ... http2" directive is deprecated, use the "http2" directive instead in /etc/nginx/conf.d/default.conf:23

webserver-1 | 2025/04/05 21:28:33 [warn] 9#9: the "listen ... http2" directive is deprecated, use the "http2" directive instead in /etc/nginx/conf.d/default.conf:24

webserver-1 | nginx: [warn] the "listen ... http2" directive is deprecated, use the "http2" directive instead in /etc/nginx/conf.d/default.conf:24

webserver-1 | 2025/04/05 21:28:33 [notice] 9#9: using the "epoll" event method

webserver-1 | 2025/04/05 21:28:33 [notice] 9#9: nginx/1.27.4

# Minimum Nginx version required: 1.13.0 (released Apr 25, 2017)

# Please check your Nginx installation features the following modules via 'nginx -V':

# STANDARD HTTP MODULES: Core, Proxy, Rewrite, Access, Gzip, Headers, HTTP/2, Log, Real IP, SSL, Thread Pool, Upstream, AIO Multithreading.

# THIRD PARTY MODULES: None.

server {

listen 80;

listen [::]:80;

server_name ${WEBSERVER_HOST};

location /.well-known/acme-challenge/ {

default_type "text/plain";

root /var/www/certbot;

}

location / { return 301 https://$host$request_uri; }

}

upstream backend {

server ${PEERTUBE_HOST};

}

server {

listen 443 ssl http2;

listen [::]:443 ssl http2;

server_name ${WEBSERVER_HOST};

access_log /var/log/nginx/peertube.access.log; # reduce I/O with buffer=10m flush=5m

error_log /var/log/nginx/peertube.error.log;

##

# Certificates

# you need a certificate to run in production. see https://letsencrypt.org/

##

ssl_certificate /etc/letsencrypt/live/${WEBSERVER_HOST}/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/${WEBSERVER_HOST}/privkey.pem;

location ^~ '/.well-known/acme-challenge' {

default_type "text/plain";

root /var/www/certbot;

}

##

# Security hardening (as of Nov 15, 2020)

# based on Mozilla Guideline v5.6

##

ssl_protocols TLSv1.2 TLSv1.3;

ssl_prefer_server_ciphers on;

ssl_ciphers ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256; # add ECDHE-RSA-AES256-SHA if you want compatibility with Android 4

ssl_session_timeout 1d; # defaults to 5m

ssl_session_cache shared:SSL:10m; # estimated to 40k sessions

ssl_session_tickets off;

# HSTS (https://hstspreload.org), requires to be copied in 'location' sections that have add_header directives

#add_header Strict-Transport-Security "max-age=63072000; includeSubDomains";

##

# Application

##

location @api {

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

client_max_body_size 100k; # default is 1M

proxy_connect_timeout 10m;

proxy_send_timeout 10m;

proxy_read_timeout 10m;

send_timeout 10m;

proxy_pass http://backend;

}

location / {

try_files /dev/null @api;

}

location ~ ^/api/v1/videos/(upload-resumable|([^/]+/source/replace-resumable))$ {

client_max_body_size 0;

proxy_request_buffering off;

try_files /dev/null @api;

}

location ~ ^/api/v1/users/[^/]+/imports/import-resumable$ {

client_max_body_size 0;

proxy_request_buffering off;

try_files /dev/null @api;

}

location ~ ^/api/v1/videos/(upload|([^/]+/studio/edit))$ {

limit_except POST HEAD { deny all; }

# This is the maximum upload size, which roughly matches the maximum size of a video file.

# Note that temporary space is needed equal to the total size of all concurrent uploads.

# This data gets stored in /var/lib/nginx by default, so you may want to put this directory

# on a dedicated filesystem.

client_max_body_size 12G; # default is 1M

add_header X-File-Maximum-Size 8G always; # inform backend of the set value in bytes before mime-encoding (x * 1.4 >= client_max_body_size)

try_files /dev/null @api;

}

location ~ ^/api/v1/runners/jobs/[^/]+/(update|success)$ {

client_max_body_size 12G; # default is 1M

add_header X-File-Maximum-Size 8G always; # inform backend of the set value in bytes before mime-encoding (x * 1.4 >= client_max_body_size)

try_files /dev/null @api;

}

location ~ ^/api/v1/(videos|video-playlists|video-channels|users/me) {

client_max_body_size 6M; # default is 1M

add_header X-File-Maximum-Size 4M always; # inform backend of the set value in bytes before mime-encoding (x * 1.4 >= client_max_body_size)

try_files /dev/null @api;

}

##

# Websocket

##

location @api_websocket {

proxy_http_version 1.1;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

proxy_pass http://backend;

}

location /socket.io {

try_files /dev/null @api_websocket;

}

location /tracker/socket {

# Peers send a message to the tracker every 15 minutes

# Don't close the websocket before then

proxy_read_timeout 15m; # default is 60s

try_files /dev/null @api_websocket;

}

# Plugin websocket routes

location ~ ^/plugins/[^/]+(/[^/]+)?/ws/ {

try_files /dev/null @api_websocket;

}

##

# Performance optimizations

# For extra performance please refer to https://github.com/denji/nginx-tuning

##

root /var/www/peertube/storage;

# Enable compression for JS/CSS/HTML, for improved client load times.

# It might be nice to compress JSON/XML as returned by the API, but

# leaving that out to protect against potential BREACH attack.

gzip on;

gzip_vary on;

gzip_types # text/html is always compressed by HttpGzipModule

text/css

application/javascript

font/truetype

font/opentype

application/vnd.ms-fontobject

image/svg+xml

application/xml;

gzip_min_length 1000; # default is 20 bytes

gzip_buffers 16 8k;

gzip_comp_level 2; # default is 1

client_body_timeout 30s; # default is 60

client_header_timeout 10s; # default is 60

send_timeout 10s; # default is 60

keepalive_timeout 10s; # default is 75

resolver_timeout 10s; # default is 30

reset_timedout_connection on;

proxy_ignore_client_abort on;

tcp_nopush on; # send headers in one piece

tcp_nodelay on; # don't buffer data sent, good for small data bursts in real time

# If you have a small /var/lib partition, it could be interesting to store temp nginx uploads in a different place

# See https://nginx.org/en/docs/http/ngx_http_core_module.html#client_body_temp_path

#client_body_temp_path /var/www/peertube/storage/nginx/;

# Bypass PeerTube for performance reasons. Optional.

# Should be consistent with client-overrides assets list in client.ts server controller

location ~ ^/client/(assets/images/(icons/icon-36x36\.png|icons/icon-48x48\.png|icons/icon-72x72\.png|icons/icon-96x96\.png|icons/icon-144x144\.png|icons/icon-192x192\.png|icons/icon-512x512\.png|logo\.svg|favicon\.png|default-playlist\.jpg|default-avatar-account\.png|default-avatar-account-48x48\.png|default-avatar-video-channel\.png|default-avatar-video-channel-48x48\.png))$ {

add_header Cache-Control "public, max-age=31536000, immutable"; # Cache 1 year

root /var/www/peertube;

try_files /storage/client-overrides/$1 /peertube-latest/client/dist/$1 @api;

}

# Bypass PeerTube for performance reasons. Optional.

location ~ ^/client/(.*\.(js|css|png|svg|woff2|otf|ttf|woff|eot))$ {

add_header Cache-Control "public, max-age=31536000, immutable"; # Cache 1 year

alias /var/www/peertube/peertube-latest/client/dist/$1;

}

location ~ ^(/static/(webseed|web-videos|streaming-playlists/hls)/private/)|^/download {

# We can't rate limit a try_files directive, so we need to duplicate @api

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $http_host;

proxy_set_header X-Real-IP $remote_addr;

proxy_limit_rate 5M;

proxy_pass http://backend;

}

# Bypass PeerTube for performance reasons. Optional.

location ~ ^/static/(webseed|web-videos|redundancy|streaming-playlists)/ {

limit_rate_after 5M;

set $peertube_limit_rate 5M;

# Use this line with nginx >= 1.17.0

limit_rate $peertube_limit_rate;

# Or this line with nginx < 1.17.0

# set $limit_rate $peertube_limit_rate;

if ($request_method = 'OPTIONS') {

add_header Access-Control-Allow-Origin '*';

add_header Access-Control-Allow-Methods 'GET, OPTIONS';

add_header Access-Control-Allow-Headers 'Range,DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type';

add_header Access-Control-Max-Age 1728000; # Preflight request can be cached 20 days

add_header Content-Type 'text/plain; charset=UTF-8';

add_header Content-Length 0;

return 204;

}

if ($request_method = 'GET') {

add_header Access-Control-Allow-Origin '*';

add_header Access-Control-Allow-Methods 'GET, OPTIONS';

add_header Access-Control-Allow-Headers 'Range,DNT,X-CustomHeader,Keep-Alive,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type';

}

# Enabling the sendfile directive eliminates the step of copying the data into the buffer

# and enables direct copying data from one file descriptor to another.

sendfile on;

sendfile_max_chunk 1M; # prevent one fast connection from entirely occupying the worker process. should be > 800k.

aio threads;

# web-videos is the name of the directory mapped to the `storage.web_videos` key in your PeerTube configuration

rewrite ^/static/webseed/(.*)$ /web-videos/$1 break;

rewrite ^/static/(.*)$ /$1 break;

try_files $uri @api;

}

}
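
For reference, the warnings point at lines 23 and 24 of /etc/nginx/conf.d/default.conf, which presumably correspond to the two "listen ... ssl http2" lines in the HTTPS server block above. A minimal sketch of the change, assuming nginx 1.25.1 or newer (the release that introduced the standalone http2 directive): remove the http2 parameter from both listen lines and enable HTTP/2 separately. Older nginx versions would reject "http2 on;", so this may conflict with the minimum version of 1.13.0 stated in the file header.

```nginx
server {
  # Plain listen directives, without the deprecated "http2" parameter
  listen 443 ssl;
  listen [::]:443 ssl;

  # Standalone directive, available since nginx 1.25.1
  http2 on;

  server_name ${WEBSERVER_HOST};

  # ... rest of the server block unchanged ...
}
```

With this change the two [warn] lines should no longer appear at startup, and the rest of the configuration should behave as before.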

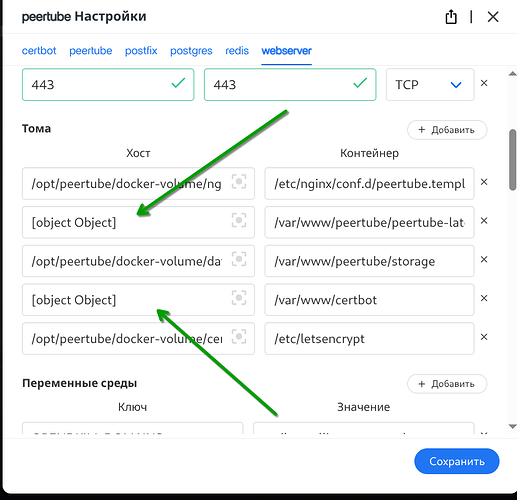

- As far as I can see, the user files are on the host system, but I can’t find the PeerTube configuration files. Are they somewhere inside a container?

ls /root/docker-volume/data

avatars original-video-files thumbnails

bin plugins tmp

cache previews tmp-persistent

captions redundancy torrents

client-overrides storyboards web-videos

logs streaming-playlists well-known

The config directory is empty both in the container and on the host:

ls

CHANGELOG.md FAQ.md SECURITY.md config package.json server tsconfig.eslint.json

CODE_OF_CONDUCT.md LICENSE apps dist packages support yarn.lock

CREDITS.md README.md client node_modules scripts tsconfig.base.json

root@4b0cf0711ea6:/app# ls /config

root@4b0cf0711ea6:/app#

- I also don’t understand how to configure SMTP mail: I get a timeout. I configured it the same way as in the usual config (classic installation), with the SMTP server, login and password, and opened ports 25 and 587 in the container (probably in vain, since it did not help), but I get:

[angeltales.angellife.ru:443] 2025-04-05 23:14:21.013 error: Connection timeout {

"component": "smtp-connection",

"sid": "2QHEkjLdZ0E"

}

[angeltales.angellife.ru:443] 2025-04-05 23:14:21.016 error: Failed to connect to SMTP mail.angelka.ru:587. {

"err": {

"stack": "Error: Connection timeout\n at SMTPConnection._formatError (/app/node_modules/nodemailer/lib/smtp-connection/index.js:809:19)\n at SMTPConnection._onError (/app/node_modules/nodemailer/lib/smtp-connection/index.js:795:20)\n at Timeout.<anonymous> (/app/node_modules/nodemailer/lib/smtp-connection/index.js:237:22)\n at listOnTimeout (node:internal/timers:581:17)\n at process.processTimers (node:internal/timers:519:7)",

"message": "Connection timeout",

"code": "ETIMEDOUT",

"command": "CONN"

- It is also not described which parameters should be set for Postfix:

# Postfix service configuration

POSTFIX_myhostname=<MY DOMAIN>

# If you need to generate a list of sub/DOMAIN keys

# pass them as a whitespace separated string <DOMAIN>=<selector>

OPENDKIM_DOMAINS=<MY DOMAIN>=peertube

# see https://github.com/wader/postfix-relay/pull/18

OPENDKIM_RequireSafeKeys=no